Redesign: From PHP/MySQL to Node/MongoDB Stack

15 October, 2013

Why I switched from a PHP/MySQL stack to MongoDB/Node.

Although still a work in progress, consider this my journal of the design and development process thus far. I also added some notes on how I tackled some pretty common problems. You guys are right.. I do need to blog more often!

It’s amazing how dated my old blog felt after just one year! I guess it just goes to show how fast the web is progressing.

Show Me The Stack

So when trying to figure out the stack for this project I really wanted to get away from the usual PHP - MySQL - Codeigniter/Modx stack that I was used to.

On the back-end:

- NodeJS: Just because I was pretty impressed by the benchmarks and I like javascript!

- Express.js: Most mature framework for node.

- MongoDB: Seems to be the most promising noSQL DB.

- Mongoose: This is a Node module that lets you define schemas for your MongoDB collections. I changed my mind and removed this module. Why? Well, what I like about Mongo is its schema-less nature.

- MongoSkin: This is pretty much just a wrapper for the native mongodb node driver. Less callback mayhem. Mongojs is also pretty good.

- Bower: Elegant - NPM style - Client side dependency management

On the front-end:

- Backbone.js: I really enjoy the lightweight, non-opinionated and event driven nature of this library. Plus it’s very well documented!

- NProgress: Really cool preloader plug-in that went viral on HN a few weeks ago. I will say that very rarely do I come across a plug-in that just works EXACTLY as advertised. I didn’t even have to read docs or hack it.. API is idiot proof (just 4 simple endpoints) and just does what you expect right out of the box.

- Bootstrap 3: I quickly realized that it was taking just as much effort (mostly sifting through documentation) to implement this semi-trivial layout as it would if I just wrote it myself. FYI: I later stumbled upon this template that is almost exactly what I needed. But oh well.. doing it myself was more fun.

Hosting:

- Nodejitsu: I took this for a spin one day and never got out! The CLI is very straightforward: just jitsu deploy. It has all the features you’d expect, like continuous deploys, snapshots with auto-versioning, etc. And the mods answered all my questions very quickly on their IRC channel.

- MongoHQ: Honestly, I don’t have an opinion about this yet. It was just one of the options in Nodejitsu. It did go down today for ~20min, but I suppose those things happen. What I like is that they immediately updated their status page and announced that they were restarting the crashed server.

And there you have it… you’ve seen my stack!

Ok, But why a single page app?

Excellent question.. Despite popular belief, it’s not all sexy animations and preloaders over here. There are indeed many cool benefits but also some additional challenges to be considered.

Added Challenges:

State Management

This is something that you don’t really need to think about when the application logic is on the server side - A visitor navigates to a specific URL, the server processes this request, does all the magic, and ultimately delivers the perfect page with the right title, meta info, active menu item, content etc. This “automatic” and predictable state management is actually not free with single page applications. State needs to be manually managed for each request. This becomes even more important when dealing with the next issue.
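To make "manually managed" a bit more concrete, here is a minimal sketch of deriving page state from a URL on the client. The route table, the `resolveState` name and the titles are all made up for illustration; this is not the code running on this blog.

```javascript
// Hypothetical route table: each entry maps a URL pattern to the state
// the server would normally have baked into the page for us.
var routes = [
  {
    pattern: /^\/$/,
    state: function () {
      return { title: "Home", activeMenu: "home" };
    }
  },
  {
    pattern: /^\/open\/([\w-]+)$/,
    state: function (slug) {
      return { title: "Post: " + slug, activeMenu: "blog", slug: slug };
    }
  }
];

// Resolve a path to the state the UI has to apply (title, active menu item...).
function resolveState(path) {
  for (var i = 0; i < routes.length; i++) {
    var match = routes[i].pattern.exec(path);
    if (match) {
      // Captured groups (e.g. the slug) become the handler's arguments.
      return routes[i].state.apply(null, match.slice(1));
    }
  }
  return { title: "Not found", activeMenu: null };
}

// In the browser you would apply the result on every route change, e.g.:
//   var state = resolveState(window.location.pathname);
//   document.title = state.title;
```

The point is only that every route has to produce this state explicitly; a router such as Backbone's gives you the hook, but not the state itself.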

Crawlability (SEO)

This is probably the single biggest reason why most people shy away from SPAs… and for good reason. Unless I were creating content for just me and my mom (Sidenote: luv mum) then I NEED search engines to index my app and all its pages properly. This is tricky because all the URLs point to the same page, which is then asynchronously updated with javascript AFTER page load.

This is like not waiting for your girlfriend to get ready before snapping pictures of her and posting them on GooglePlus! Just rude! Now, in a perfect world crawlers would just WAIT for all your javascript to finish executing and THEN do their thing. However, if that were the case I wouldn’t be writing this paragraph now would I? :)

The Good ol Back Button

Again, a behavior you don’t even need to think about with standard sites. Luckily libraries like BackboneJS and AngularJS provide some very smart solutions for this. So although it is something to consider, I don’t think this is a deal breaker anymore, especially with the more widespread adoption of HTML5 pushState (~74%).

Added Benefit:

Better Perceived Performance

As web developers we want to govern the user experience like a compulsive dictator. It’s difficult to do this if we relinquish total control of our kingdom each time a user makes a request for data not yet available (the blank screen of death). Single page apps allow us to outsource this request to an asynchronous process and engage the user while this magic is going on (preloaders and/or partial rendering). This results in a relatively small improvement in “actual” performance (smaller fetch size) but much improved perceived performance. To me, this is the most valuable benefit and why I think single page apps have become so popular lately. #winning

The Solutions (opinionated)

Here are some of the solutions to some pretty common problems that worked out for me.

Infinite Scroll

As always, I first tried to find something pre-baked that I didn’t have to write from scratch, but all the plug-ins I found seemed too involved and hefty for this little blog. What I needed was really not that complicated; I had done this from scratch on past projects and it really wasn’t that bad. So after a couple minutes of sifting through plug-in docs I decided to just implement it myself, that way I have more control and know exactly what’s going on when debugging. You can take a look at the Github Repo for details.
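For a rough idea of how little is needed, here is a minimal sketch of the two pieces a hand-rolled infinite scroll usually boils down to. It is not the code from the repo: `nearBottom`, `createPager`, the 200px default threshold and the `fetchPage(skip, limit, done)` loader are all assumptions made for this example.

```javascript
// Decide whether the reader is close enough to the bottom to fetch more.
// All measurements are in pixels; the 200px default is an assumed value.
function nearBottom(scrollTop, viewportHeight, contentHeight, threshold) {
  threshold = threshold === undefined ? 200 : threshold;
  return scrollTop + viewportHeight >= contentHeight - threshold;
}

// Tracks paging so overlapping scroll events never trigger duplicate fetches.
// `fetchPage(skip, limit, done)` is a hypothetical loader supplied by the
// caller; it must call done(items) when it finishes.
// `alreadyLoaded` is the number of items rendered before the first fetch.
function createPager(fetchPage, pageSize, alreadyLoaded) {
  var loaded = alreadyLoaded || 0;
  var loading = false;
  var finished = false;

  return {
    // Returns true if a fetch was started, false if it was skipped.
    loadMore: function (onItems) {
      if (loading || finished) { return false; }
      loading = true;
      fetchPage(loaded, pageSize, function (items) {
        loading = false;
        loaded += items.length;
        // A short page means the server has nothing left to send.
        if (items.length < pageSize) { finished = true; }
        onItems(items);
      });
      return true;
    }
  };
}

// Browser wiring (illustrative only):
//   window.addEventListener("scroll", function () {
//     if (nearBottom(window.pageYOffset, window.innerHeight,
//                    document.body.scrollHeight)) {
//       pager.loadMore(renderPosts);
//     }
//   });
```

Keeping the "should I load?" check separate from the paging state makes both easy to debug on their own.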

I also bootstrap the first 5 documents server-side so there’s no need to make that initial ajax request each time you hit the homepage (this is actually recommended by Backbone.js).

..all models needed at load time should already be bootstrapped into place

I could explain in detail how this works in a future post (maybe even a quick screencast), just let me know in comments if interested.
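A rough sketch of the bootstrapping idea, for the curious: the server serializes the first few documents into an inline script so the client can fill its collection without an ajax round trip. The `serializeForScript` and `bootstrapScript` helpers and the `window.bootstrappedPosts` global are hypothetical names picked for this example, not what this blog actually uses.

```javascript
// Serialize data for embedding inside an inline <script> tag.
// Escaping "<" keeps a stray "</script>" in post content from
// closing the tag early; the result is still valid JSON.
function serializeForScript(data) {
  return JSON.stringify(data).replace(/</g, "\\u003c");
}

// Build the inline script the server would render into the page.
// `window.bootstrappedPosts` is an assumed global for this sketch.
function bootstrapScript(posts) {
  return "<script>window.bootstrappedPosts = " +
    serializeForScript(posts) + ";</script>";
}

// On the client, a Backbone collection can then be filled directly:
//   posts.reset(window.bootstrappedPosts);
```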

Asynchronous Comments (disqus)

Rendering 40+ comments while launching a modal window makes for a not-so-smooth rendering experience. To keep modals “snappy” I had to find a way to delay the disqus reset/init. I solved this by starting to load comments only after the user starts scrolling down the page. Check out the helper methods that make this happen.
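The "wait for the first scroll" part is not shown in the helpers in this post, so here is a minimal sketch of one way to do it. `onFirstScroll` is a hypothetical helper written for this example; it only assumes the target has the standard addEventListener/removeEventListener methods.

```javascript
// Run `callback` the first time `target` emits a "scroll" event,
// then detach so later scrolls cost nothing.
// `target` is anything with addEventListener/removeEventListener
// (window, or the scrolling modal element).
function onFirstScroll(target, callback) {
  function handler() {
    target.removeEventListener("scroll", handler);
    callback();
  }
  target.addEventListener("scroll", handler);
}

// In the browser (the config object here is a placeholder):
//   onFirstScroll(window, function () { initDisqus(config); });
```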

The helper method below checks to see if we’ve already downloaded the disqus scripts; if not, we do so asynchronously. If we have, we just reset it with new configs. Here’s the official disqus ajax docs, or check out the helper source if you’re curious about how I put it all together.

    function initDisqus(config) {
      // Hand the new page's settings to Disqus before (re)loading.
      disqus_config.params = config;

      if (this.loaded) {
        // embed.js is already on the page: just reset the thread.
        DISQUS.reset({
          reload: true
        });
      } else {
        // First call: inject embed.js asynchronously.
        (function() {
          var dsq = document.createElement("script");
          dsq.type = "text/javascript";
          dsq.async = true;
          dsq.src = "http://" + disqus_shortname + ".disqus.com/embed.js";
          (
            document.getElementsByTagName("head")[0] ||
            document.getElementsByTagName("body")[0]
          ).appendChild(dsq);
        })();
        this.loaded = true;
      }
    }

This one works the same as the one above, but for the comment counts script (count.js) instead.

    function initDisqusCount() {
      if (typeof DISQUSWIDGETS !== "undefined") {
        // count.js is already loaded: refresh the counts.
        DISQUSWIDGETS.getCount();
      } else {
        // First call: inject count.js asynchronously.
        (function() {
          var s = document.createElement("script");
          s.async = true;
          s.type = "text/javascript";
          s.src = "http://" + disqus_shortname + ".disqus.com/count.js";
          (
            document.getElementsByTagName("HEAD")[0] ||
            document.getElementsByTagName("BODY")[0]
          ).appendChild(s);
        })();
      }
    }

Google Analytics & Custom Events Tracking (Analytics.js)

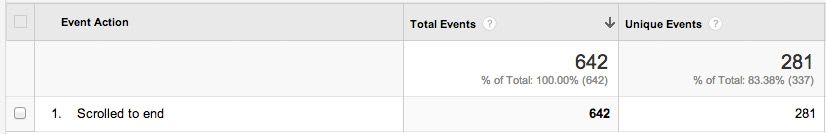

For Analytics I’m actually using the new Analytics.js script (still in beta), and so far it has worked great. I even started tracking custom user events. For example: I wanted to keep track of how many people actually scrolled to the end of the home page. Since I already had an event being triggered for this action I just added this one line of code: ga('send', 'event', 'scrollEvents', 'Scrolled to end');. Custom event tracking is not new, but the API is way more elegant!
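If you want that call to be safe when analytics.js has not loaded (ad blockers, slow networks), a small guard helps. This is a sketch only: `trackEvent` is a hypothetical wrapper, and passing the global object in as `root` is just a choice made here to keep it testable outside a browser.

```javascript
// Send a custom event only if analytics.js has defined the `ga` function.
// `root` is the global object (window in the browser).
// Returns true if the event was handed to ga, false otherwise.
function trackEvent(root, category, action, label) {
  if (typeof root.ga !== "function") { return false; }
  if (label === undefined) {
    root.ga("send", "event", category, action);
  } else {
    root.ga("send", "event", category, action, label);
  }
  return true;
}

// In the browser:
//   trackEvent(window, "scrollEvents", "Scrolled to end");
```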

The only real issue I had was that it didn’t work well with my old account property (visits were being tracked but not recorded). As it turns out, I had to create a new property with the “Universal Analytics” feature selected to get this to work correctly. Who would have thought? 0_o

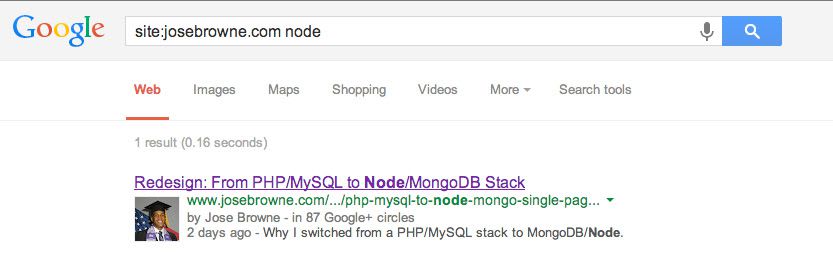

SEO

I actually delayed this article by a few days because I wanted my solution to this to be a part of this post.

Progressive Enhancement VS Pre-rendering

There’s one group that recommends Progressive Enhancement as the solution to this (among other things), and then another group on the side of generating snapshots of fully (all JS done executing) rendered pages. These pages are generated by a headless browser like PhantomJS or Selenium.

They each have their cons: Progressive Enhancement will make your application code more complex, and redirecting bots to snapshots comes with the risk of having your application banned from search engines! Guess which side I’m on?

I’m on the side of creating snapshots for two reasons:

- I’m a lazy developer

- Google provides specs on how it should be done.

TL;DR - Basically, it involves detecting URLs containing the ?_escaped_fragment_= query parameter and responding with fully rendered snapshots to be used for indexing.
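A minimal sketch of that detection step, assuming the `_escaped_fragment_` query parameter from Google's AJAX crawling scheme and an Express-style `(req, res, next)` middleware. `wantsSnapshot`, `snapshotMiddleware` and `renderSnapshot` are hypothetical names for this example; how the snapshot itself gets rendered is left to the caller.

```javascript
// Return true when a request URL carries the _escaped_fragment_ query
// parameter that crawlers following the AJAX crawling scheme send.
function wantsSnapshot(requestUrl) {
  var query = requestUrl.split("?")[1] || "";
  return query.split("&").some(function (pair) {
    // Compare the parameter name only; its value may be empty.
    return pair.split("=")[0] === "_escaped_fragment_";
  });
}

// Express-style middleware sketch. `renderSnapshot(url, callback)` is a
// hypothetical function that yields the fully rendered HTML for a URL.
function snapshotMiddleware(renderSnapshot) {
  return function (req, res, next) {
    if (!wantsSnapshot(req.url)) { return next(); }
    renderSnapshot(req.url, function (err, html) {
      if (err) { return next(err); }
      res.send(html);
    });
  };
}
```

Regular visitors fall straight through to `next()`, so the normal single page app is untouched.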

Ok..How?

I decided to go with prerender-node, an express middleware that installs with just one line of code! It uses User-Agent string matching to detect and redirect bots to an instance of prerender which responds with PhantomJS generated snapshots. Ideally it would also use the recommended “escaped_fragment” technique for detection, but this module is less than a month old and the developers have already indicated that this is coming next! UPDATE: Already added!

You can actually test this right now by just making a GET request to the URL below and inspecting the source.

http://prerender.herokuapp.com/http://josebrowne.com/open/from-windows-to-mac-dev

Notice that the title, meta tags and content (which are all updated with JS) are correct. I tested this using the Google “Fetch as Google Bot” Webmaster tool and the Facebook URL debugger, and confirmed that this method works as advertised!

As for prerender, you can run your own instance if you want (which is easy to configure) OR use the default, which points to a prerender instance hosted by the very generous folks at CollectiveIP.
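For reference, the User-Agent matching mentioned earlier comes down to something like the sketch below. The pattern list is a small illustrative sample chosen for this example, not prerender-node's actual list, and `isBot` is a hypothetical name.

```javascript
// A short, illustrative list of crawler User-Agent substrings.
// Assumption: this is NOT the list prerender-node ships with.
var BOT_PATTERNS = ["googlebot", "bingbot", "facebookexternalhit", "twitterbot"];

// Case-insensitive substring match against the User-Agent header.
// A missing header is treated as a regular visitor.
function isBot(userAgent) {
  var ua = (userAgent || "").toLowerCase();
  return BOT_PATTERNS.some(function (pattern) {
    return ua.indexOf(pattern) !== -1;
  });
}

// In an Express app the header is available as req.headers["user-agent"].
```

User-Agent strings are easy to spoof and the list goes stale, which is one reason the escaped-fragment check is the more dependable signal.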

Comment / Share / Like

That’s all I got for now. Feedback/criticism/suggestions are all welcomed and encouraged! As always I’ll try and keep this up to date!

Reach me @JoseBrowneX